High-Level Architecture of a Hadoop Cluster

As we already know, Hadoop is an open-source software framework for storing data and running applications on clusters of commodity hardware.

What is commodity hardware?

Commodity hardware means inexpensive, affordable, easy-to-obtain computers.

Why is Hadoop important?

Hadoop is important because it can store and process huge amounts of any kind of data with the desired throughput. It can also handle data whose volume and variety are constantly increasing and changing.

A Hadoop cluster can deliver as much computing power as required. For more processing power, you just add more computers.

Another characteristic of Hadoop is that it provides fault tolerance even though the individual computers are prone to failure. How? We will see later in this post when we discuss HDFS.

Together with the advantages listed above, Hadoop is really flexible, low-cost and highly scalable.

These advantages have made Hadoop famous in the world of Big Data.

I will discuss in more detail how Hadoop achieves all these features, but first let us look at the core components of Hadoop.

Core Hadoop Components

As you can see in the figure, the core components of Hadoop are:

- Hadoop Common

- Hadoop Distributed File System (HDFS)

- MapReduce

and

- Data Access/Data Processing/Monitoring and Management Components

Hadoop Common

Hadoop Common is a collection of components and interfaces that support the other Hadoop modules. For example, if HBase and Hive want to access HDFS, they need Java archives (JAR files) that are stored in Hadoop Common. Hadoop Common also contains the JAR files and scripts required to start Hadoop.

Hadoop Distributed File System (HDFS)

HDFS is the default file system of Hadoop. Data in HDFS is stored as data blocks. As the name Distributed File System suggests, HDFS creates several replicas of each data block and distributes them across different nodes of the cluster for reliable and fast data access. A checksum is calculated for each data block. If a checksum error or a machine failure occurs, the corrupt or lost block can be recovered from a replica stored on another node. This is how Hadoop recovers from data loss. HDFS has three components:

- NameNode

- DataNode

- Secondary NameNode
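The block, replica and checksum ideas described above can be sketched as a toy, in-memory simulation in Python. It is not HDFS code. The tiny 8-byte block size, the four hypothetical node names, the replication factor of 3, the round-robin placement and the use of CRC32 from `zlib` are all assumptions chosen for illustration. Real HDFS uses far larger blocks (128 MB by default in recent versions), its own checksum scheme and a smarter placement policy.

```python
import zlib

BLOCK_SIZE = 8                                # bytes per block (tiny, illustration only)
REPLICATION = 3                               # assumed replication factor
NODES = ["node1", "node2", "node3", "node4"]  # hypothetical DataNodes


def split_into_blocks(data, block_size=BLOCK_SIZE):
    """Cut the file into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def store(data):
    """Place each block on REPLICATION distinct nodes and record its checksum."""
    storage = {node: {} for node in NODES}   # node -> {block_id: bytes}
    checksums = {}                            # block_id -> CRC32 of the original block
    for block_id, block in enumerate(split_into_blocks(data)):
        checksums[block_id] = zlib.crc32(block)
        # Round-robin placement puts the replicas of one block on different nodes.
        for r in range(REPLICATION):
            storage[NODES[(block_id + r) % len(NODES)]][block_id] = block
    return storage, checksums


def read_block(storage, checksums, block_id):
    """Return the first replica whose checksum still matches; skip lost or corrupt ones."""
    for node in NODES:
        block = storage[node].get(block_id)
        if block is not None and zlib.crc32(block) == checksums[block_id]:
            return block
    raise IOError(f"all replicas of block {block_id} are lost or corrupt")


def read_file(storage, checksums):
    """Reassemble the file from its blocks in block-ID order."""
    return b"".join(read_block(storage, checksums, b) for b in sorted(checksums))


storage, checksums = store(b"Hadoop stores data as replicated blocks")
storage["node1"][0] = b"garbage!"     # corrupt one replica of block 0
storage["node2"].clear()              # simulate a failed node
print(read_file(storage, checksums))  # still the original bytes
```

Even with one corrupt replica and one dead node, every block still has a healthy copy somewhere. The read therefore succeeds, and an error is raised only when all replicas of a block are gone.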

What is NameNode? What is the purpose of NameNode?

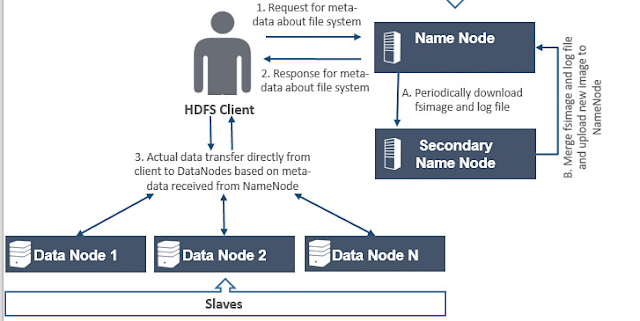

A NameNode in Hadoop stores the metadata of the actual data, e.g. file name, path, number of blocks, block IDs, block locations, number of replicas, etc. (see fig.1 above). Client applications talk to the NameNode whenever they want to perform a data operation such as read or write.

The NameNode knows the list of blocks, and their locations, for any given file in HDFS. With this information, it knows how to construct the file from its blocks. When the NameNode receives a request from a client application, it uses its metadata to tell the client which DataNodes hold (or should receive) the blocks. The client then reads or writes the data directly with those DataNodes.

The NameNode is so critical to HDFS that when it is down, the HDFS/Hadoop cluster is inaccessible and considered down.

In a cluster without NameNode high availability, the NameNode is a single point of failure. We should therefore consider hardware and redundancy options for it while designing and implementing a Hadoop cluster.

The NameNode is usually configured with a lot of memory (RAM), because the file system metadata, including block locations, is held in main memory.

NameNode is responsible for operations like opening, closing, and renaming files and directories.
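To make the NameNode's role more concrete, here is a toy sketch of the kind of metadata it keeps in memory. There is a mapping from file path to an ordered list of block IDs, and another from each block ID to the DataNodes holding a replica. The class name, method names, block IDs and node names are hypothetical and are not Hadoop's API. The sketch only answers "which blocks, and where"; the block data itself lives on the DataNodes.

```python
from dataclasses import dataclass, field


@dataclass
class NameNodeMetadata:
    """Toy model of the NameNode's in-memory metadata (hypothetical names)."""
    files: dict = field(default_factory=dict)      # path -> [block_id, ...] in file order
    locations: dict = field(default_factory=dict)  # block_id -> [datanode, ...]

    def add_file(self, path, block_ids, replicas):
        """Record a new file: its ordered block IDs and where each block's replicas live."""
        self.files[path] = list(block_ids)
        for block_id, nodes in zip(self.files[path], replicas):
            self.locations[block_id] = list(nodes)

    def get_block_locations(self, path):
        """Answer a client's read request: blocks in order, each with its DataNodes."""
        if path not in self.files:
            raise FileNotFoundError(path)
        return [(block_id, self.locations[block_id]) for block_id in self.files[path]]

    def rename(self, old_path, new_path):
        """A rename only changes metadata; no block data is moved."""
        self.files[new_path] = self.files.pop(old_path)


meta = NameNodeMetadata()
meta.add_file("/logs/app.log", ["blk_1", "blk_2"],
              [["dn1", "dn2", "dn3"], ["dn2", "dn3", "dn4"]])
print(meta.get_block_locations("/logs/app.log"))
```

Because everything here is a dictionary lookup, operations like rename are cheap metadata updates. This also shows why the NameNode needs plenty of RAM: every file and block adds entries to these in-memory structures.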

What is a DataNode?

A DataNode stores the actual HDFS data blocks on its local disks. It is responsible for serving read and write requests from the file system's clients. DataNodes also perform block creation, deletion, and replication upon instruction from the NameNode. See fig. 2 above.
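The two kinds of work a DataNode does can be sketched in a small class. It serves client reads and writes, and it carries out instructions from the NameNode. In this toy version, blocks live in a dictionary instead of local disk files, and the `"DELETE"` / `"REPLICATE"` command strings are hypothetical, not HDFS's real protocol. The `block_report` method reflects the fact that real DataNodes periodically report the blocks they hold to the NameNode.

```python
class DataNode:
    """Toy DataNode: keeps blocks in a dict instead of local disk files."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}  # block_id -> bytes

    # --- Requests coming from clients ---
    def write_block(self, block_id, data):
        self.blocks[block_id] = data

    def read_block(self, block_id):
        return self.blocks[block_id]

    # --- Instructions coming from the NameNode (hypothetical command format) ---
    def handle_command(self, command, block_id, target=None):
        if command == "DELETE":
            self.blocks.pop(block_id, None)
        elif command == "REPLICATE":
            # Copy the local replica to another DataNode.
            target.write_block(block_id, self.blocks[block_id])
        else:
            raise ValueError(f"unknown command: {command}")

    def block_report(self):
        """Block IDs this node holds, as it would report them to the NameNode."""
        return sorted(self.blocks)


dn1, dn2 = DataNode("dn1"), DataNode("dn2")
dn1.write_block("blk_1", b"hello")
dn1.handle_command("REPLICATE", "blk_1", target=dn2)
print(dn2.block_report())
```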

What is Secondary NameNode?

The Secondary NameNode in Hadoop is a dedicated node in the HDFS cluster whose main function is to take checkpoints of the file system metadata held by the NameNode.

To elaborate a little more on the purpose of the Secondary NameNode, let us get a bit more technical about the NameNode.

As explained above, the NameNode holds the metadata for HDFS, such as namespace information and block information. While the NameNode is running, all this information is kept in main memory, but the namespace is also stored on disk for persistence. Block locations are the exception: they are rebuilt from the reports that DataNodes send. The files the NameNode uses to store this data on disk are called the fsimage and the edit logs.

Fsimage - It's the snapshot of the file system metadata when the NameNode started.

Edit logs - They record the sequence of changes made to the file system after the NameNode started.

Edit logs are applied to the fsimage only when the NameNode restarts, and NameNode restarts are rare. This means the edit logs can grow very large and become unmanageable. Also, because the edit logs are applied to the fsimage at restart time, the larger the edit log, the longer the NameNode takes to restart. To overcome these issues, we need a mechanism that keeps the edit log small and manageable and also keeps the fsimage up to date. This job is assigned to the Secondary NameNode. At this point we can also conclude that the whole purpose of the Secondary NameNode is to create checkpoints in HDFS; it is not a backup node for the NameNode.
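The checkpoint idea can be sketched with plain Python data structures. The namespace is a dictionary, the fsimage is a saved copy of it, and the edit log is a list of operations. A restart replays the whole log, so its cost grows with the log length. A checkpoint does the same replay ahead of time and then starts a fresh, empty log. The operation names and tuple format are assumptions for illustration; the real fsimage and edits files are binary formats. In a real cluster, the Secondary NameNode fetches them from the NameNode, merges them, and sends the new fsimage back.

```python
import copy


def apply_edit(namespace, edit):
    """Apply one logged operation to a namespace dict (path -> metadata)."""
    op, path, *args = edit
    if op == "CREATE":
        namespace[path] = {"blocks": []}
    elif op == "RENAME":
        namespace[args[0]] = namespace.pop(path)
    elif op == "DELETE":
        namespace.pop(path, None)
    else:
        raise ValueError(f"unknown op {op}")


def restart(fsimage, edit_log):
    """NameNode restart: load the fsimage, then replay every edit in order."""
    namespace = copy.deepcopy(fsimage)  # never mutate the saved image
    for edit in edit_log:
        apply_edit(namespace, edit)
    return namespace


def checkpoint(fsimage, edit_log):
    """Secondary NameNode's job: merge edits into a new fsimage, start an empty log."""
    return restart(fsimage, edit_log), []


fsimage = {"/a": {"blocks": []}}
edits = [("CREATE", "/b"), ("RENAME", "/a", "/c"), ("DELETE", "/b")]
new_fsimage, new_edits = checkpoint(fsimage, edits)
print(new_fsimage, new_edits)
```

After the checkpoint, a restart from `new_fsimage` with the empty log produces the same namespace as replaying the full log, but with no replay work.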

Data Access/Data Processing/Monitoring and Management Components in Hadoop

What is HBase?

Apache HBase is a NoSQL database that runs on top of Hadoop as a distributed and scalable big data store in the Hadoop framework. HBase is a powerful database in its own right.

HBase provides two ways of accessing data:

- By row key, or via a table scan over a range of row keys

- In batch mode, using MapReduce

This dual approach to data access is something that makes HBase particularly powerful.
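The first access path (row-key lookup and range scan) works well because HBase keeps rows sorted by row key. The toy table below illustrates that with Python's `bisect` module. It is not the HBase client API. The `get`/`scan` names only borrow HBase's terminology, the `info:name` column is hypothetical, and the start-inclusive, stop-exclusive scan bounds are an assumption modeled on HBase's default scan behavior.

```python
import bisect


class SortedTable:
    """Toy table whose rows are kept sorted by row key."""

    def __init__(self):
        self.keys = []   # row keys, always kept in sorted order
        self.rows = {}   # row key -> {column: value}

    def put(self, row_key, column, value):
        if row_key not in self.rows:
            bisect.insort(self.keys, row_key)
            self.rows[row_key] = {}
        self.rows[row_key][column] = value

    def get(self, row_key):
        """Point lookup by row key (None if the row does not exist)."""
        return self.rows.get(row_key)

    def scan(self, start, stop):
        """Range scan: start inclusive, stop exclusive, returned in key order."""
        lo = bisect.bisect_left(self.keys, start)
        hi = bisect.bisect_left(self.keys, stop)
        return [(k, self.rows[k]) for k in self.keys[lo:hi]]


table = SortedTable()
for key in ["user#003", "user#001", "user#002", "order#001"]:
    table.put(key, "info:name", key.upper())
print(table.scan("user#001", "user#003"))
```

Since the keys are sorted, a scan only has to locate the two boundaries and read the rows between them, instead of checking every row.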

What is Mahout?

A mahout is a person who drives an elephant. In that sense, Mahout in Hadoop is something that drives Hadoop (Hadoop's logo is an elephant).

Mahout is an algorithm library for scalable machine learning on Hadoop. It runs on top of Hadoop, and its classic algorithms are built on the MapReduce paradigm.

What is Machine Learning?

Machine learning is a discipline of artificial intelligence focused on enabling machines to learn without being explicitly programmed, and it is commonly used to improve future performance based on previous outcomes.

Once data is stored in the Hadoop Distributed File System (HDFS), Mahout provides data science tools to automatically find meaningful patterns in those big data sets. It then uses this information to make it faster and easier to turn big data into big information.

If you want to learn more about Mahout, just go through

http://mahout.apache.org/

What is Pig?

Pig is a high-level scripting platform used with Apache Hadoop. It lets you write complex data transformations without knowing Java. Pig's simple, SQL-like scripting language is called Pig Latin. Using Pig, we can perform the usual data manipulation operations in Hadoop, such as filtering, joining, grouping and sorting.

If you want to learn more about Pig, navigate through the following links

https://pig.apache.org/

https://www.tutorialspoint.com/apache_pig/

What is Hive?

Hive is a data warehouse infrastructure tool for processing structured data in Hadoop. Using Hive, you can write SQL-like scripts that are executed as MapReduce operations. Hive's SQL-like language for processing and querying data is called HiveQL, or HQL.

If you want to learn more about Hive, go through the following URLs

https://hive.apache.org/

https://www.tutorialspoint.com/hive/hive_introduction.htm

What is ZooKeeper?

ZooKeeper is an open-source server that reliably coordinates distributed processes and enables synchronization across a cluster. In Hadoop, ZooKeeper can be viewed as a centralized repository where distributed applications can put data and get data out of it. Its synchronization, serialization and coordination services keep the distributed system functioning together as a single unit.
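As a rough picture of the "centralized repository" idea, the sketch below keeps data in a tree of slash-separated paths, which ZooKeeper calls znodes, and attaches a version number to each one. A write that names an outdated version is rejected. This lets clients coordinate updates without silently overwriting each other. It is an in-memory, single-process toy with hypothetical method names. It is not the ZooKeeper client API and has none of ZooKeeper's replication or ordering guarantees.

```python
class ToyZnodeStore:
    """In-memory stand-in for a ZooKeeper-style data tree (hypothetical API)."""

    def __init__(self):
        self.nodes = {"/": (b"", 0)}  # path -> (data, version)

    def create(self, path, data=b""):
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self.nodes:
            raise KeyError(f"parent {parent} does not exist")
        if path in self.nodes:
            raise KeyError(f"{path} already exists")
        self.nodes[path] = (data, 0)

    def get(self, path):
        """Return (data, version) so the caller can do a conditional update later."""
        return self.nodes[path]

    def set(self, path, data, expected_version):
        """Conditional write: succeeds only if nobody changed the node in between."""
        _, version = self.nodes[path]
        if version != expected_version:
            return False
        self.nodes[path] = (data, version + 1)
        return True


zk = ToyZnodeStore()
zk.create("/config")
zk.create("/config/db", b"host=a")
data, version = zk.get("/config/db")
print(zk.set("/config/db", b"host=b", version))  # first writer wins
print(zk.set("/config/db", b"host=c", version))  # stale version is rejected
```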

If you want to learn more about ZooKeeper go through

https://zookeeper.apache.org/

Download ZooKeeper from

https://zookeeper.apache.org/releases.html

What is Oozie?

Oozie is a tool with which all sorts of programs can be pipelined in a desired order to run in Hadoop's distributed environment. Oozie also provides a mechanism to run jobs on a given schedule. In simple terms, Apache Oozie is a workflow scheduler and coordination service for managing Hadoop jobs.
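An Oozie workflow is essentially a directed acyclic graph (DAG) of actions. An action runs only after the actions it depends on have finished. The sketch below shows just that ordering idea using Python's standard `graphlib` module (Python 3.9+). The job names are hypothetical. Real Oozie workflows are defined in XML (`workflow.xml`) and executed by the Oozie server, which can also run independent branches in parallel using fork and join nodes.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each job maps to the set of jobs that must finish first.
workflow = {
    "sqoop_import": set(),
    "pig_clean": {"sqoop_import"},
    "hive_aggregate": {"pig_clean"},
    "mapreduce_features": {"pig_clean"},
    "export_report": {"hive_aggregate", "mapreduce_features"},
}


def run_order(dag):
    """Return one valid execution order; raises graphlib.CycleError on cycles."""
    return list(TopologicalSorter(dag).static_order())


print(run_order(workflow))
```

`hive_aggregate` and `mapreduce_features` depend only on `pig_clean`, so any order that puts both after it is valid. Those two are the branches a scheduler could run in parallel.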

If you want to learn more about Oozie go through

http://oozie.apache.org/

What is Sqoop?

If you want to transfer data from a relational database (RDBMS) to Hadoop and vice versa, you need Sqoop.
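Conceptually, a Sqoop import reads rows from a database table and writes them to HDFS as delimited text, comma-separated by default. The sketch below mimics only that idea, using Python's built-in `sqlite3` and an in-memory database. The `customers` table, its columns and its rows are made up. A real import is run with the `sqoop import` command, which splits the work across parallel map tasks and writes the files to HDFS.

```python
import sqlite3


def rows_to_delimited_lines(conn, table, delimiter=","):
    """Read every row of `table` and format it as one delimited text line."""
    # The table name is interpolated directly, so it must come from trusted input.
    cursor = conn.execute(f"SELECT * FROM {table}")
    # NULL handling and escaping of delimiters inside values are omitted here.
    return [delimiter.join(str(value) for value in row) for row in cursor]


# Hypothetical source table in an in-memory SQLite database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, city TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                 [(1, "Asha", "Pune"), (2, "Ben", "Leeds")])
lines = rows_to_delimited_lines(conn, "customers")
print("\n".join(lines))
```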

Learn more about Sqoop at

http://sqoop.apache.org/

What is Flume?

Flume is a service used to collect, aggregate and move large amounts of log data.
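A Flume agent is built from three parts. A source receives events, a channel buffers them, and a sink delivers them to a destination such as HDFS. The toy pipeline below uses a standard-library `queue.Queue` as the channel and runs in a single thread. The log lines, the batch size and the plain list standing in for the destination are hypothetical; none of this is Flume configuration or Flume's API.

```python
import queue


def source(log_lines, channel):
    """Source: turn each incoming log line into an event and put it on the channel."""
    for line in log_lines:
        channel.put({"body": line})


def sink(channel, destination, batch_size=2):
    """Sink: drain the channel in batches and append each batch to the destination."""
    # Single-threaded toy, so checking empty() before get() is safe here.
    while not channel.empty():
        batch = []
        while len(batch) < batch_size and not channel.empty():
            batch.append(channel.get())
        destination.append([event["body"] for event in batch])


channel = queue.Queue()
hdfs_files = []  # stand-in for files written to HDFS
source(["GET /a", "GET /b", "POST /c"], channel)
sink(channel, hdfs_files)
print(hdfs_files)
```

The channel decouples the two sides: the source can accept events at its own pace while the sink writes them out in batches.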

Learn more about Flume at

https://flume.apache.org/

What is Avro?

Avro is a language-neutral data serialization system in Hadoop. Avro uses JSON format to declare data structures (schemas). Presently, it supports languages such as Java, C, C++, C#, Python, and Ruby.
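Here is what a small Avro record schema declared in JSON looks like, together with a tiny checker that tests whether a Python dict matches it. The `User` record and its fields are hypothetical. The checker handles only a few primitive types and skips details such as Avro's 32-bit range for `int`, unions and defaults. For real serialization you would use an official Avro library rather than this sketch.

```python
import json

# A hypothetical Avro record schema, declared in JSON.
USER_SCHEMA = json.loads("""
{
  "type": "record",
  "name": "User",
  "fields": [
    {"name": "name",   "type": "string"},
    {"name": "age",    "type": "int"},
    {"name": "active", "type": "boolean"}
  ]
}
""")

# Python types accepted for a few Avro primitive types (subset only).
PRIMITIVES = {"string": str, "int": int, "boolean": bool, "double": float}


def matches_schema(record, schema):
    """Check that `record` has exactly the schema's fields, each with the right type."""
    if set(record) != {fld["name"] for fld in schema["fields"]}:
        return False
    for fld in schema["fields"]:
        value = record[fld["name"]]
        expected = PRIMITIVES[fld["type"]]
        # bool is a subclass of int in Python, so reject it explicitly for "int".
        if expected is int and isinstance(value, bool):
            return False
        if not isinstance(value, expected):
            return False
    return True


print(matches_schema({"name": "Asha", "age": 30, "active": True}, USER_SCHEMA))
```

Because the schema is plain JSON, any language with a JSON parser can read the same data structure declaration. This is what makes Avro language-neutral.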

Learn more about Avro at

https://avro.apache.org/

Download Avro from

https://avro.apache.org/releases.html

What is Ambari?

Ambari is a management platform for provisioning, managing, monitoring and securing Apache Hadoop clusters.

Learn more about Ambari at

https://ambari.apache.org/

The list could go on and on, but I am pausing here to discuss a little about our beloved MapReduce.

MapReduce

What are Map and Reduce in Hadoop?

As the name suggests, MapReduce in Hadoop is a Java-based programming model made up of two important tasks, namely Map and Reduce. A MapReduce job usually splits the input data set into independent chunks, which are processed by the map tasks in a completely parallel manner. The framework sorts the outputs of the maps, which then become the input to the reduce tasks. Typically, both the input and the output of the job are stored in a file system. The framework takes care of scheduling tasks, monitoring them, and re-executing failed tasks.
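The map, sort and reduce phases described above can be simulated in plain Python with the classic word-count example. This runs in a single process and only mirrors the data flow. A real Hadoop job would implement `Mapper` and `Reducer` classes in Java (or use Hadoop Streaming), and the framework would spread the input splits across the cluster.

```python
from itertools import groupby
from operator import itemgetter


def map_phase(split):
    """Map: emit (word, 1) for every word in one input split."""
    for line in split:
        for word in line.lower().split():
            yield word, 1


def shuffle_and_sort(mapped_pairs):
    """Framework step: sort all map outputs so equal keys end up adjacent."""
    return sorted(mapped_pairs, key=itemgetter(0))


def reduce_phase(sorted_pairs):
    """Reduce: sum the counts for each word."""
    for word, group in groupby(sorted_pairs, key=itemgetter(0)):
        yield word, sum(count for _, count in group)


def word_count(splits):
    # On a real cluster, each split would be processed by its own map task in parallel.
    mapped = [pair for split in splits for pair in map_phase(split)]
    return dict(reduce_phase(shuffle_and_sort(mapped)))


splits = [["the quick brown fox"], ["the lazy dog", "The end"]]
print(word_count(splits))
# → {'brown': 1, 'dog': 1, 'end': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 3}
```

The sort step is what allows each reducer to see all values for one key together. That is why the framework sorts map outputs before handing them to the reduce tasks.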

Want to know more about MapReduce? Just click

https://hadoop.apache.org/docs/r1.2.1/mapred_tutorial.html

I will discuss the MapReduce workflow and other important Hadoop terminology in my next post.

Until then, have a nice time and enjoy learning Hadoop with me.